Together AI Products | Together Inference, Together Fine-Tuning, Together Training, and Together GPU Clusters

Excerpt

Together AI has a suite of powerful products that help you build cutting-edge generative AI models in a cost-efficient way. What’s more, the models you create are private and owned by you.

Content

Fastest cloud platform for building and running generative AI.

Speed relative to TGI, vLLM, or other inference services

Llama-2 70B

Cost relative to gpt-3.5-turbo

01

Flash-Decoding dramatically speeds up attention in the Together Inference Engine, giving up to 8x faster generation for very long sequences [7], while FlashAttention helps with the time to first token. We achieve this by carefully designing how keys and values are loaded in parallel, then separately rescaling and combining the partial results so that the attention outputs stay correct.
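To make the "rescale and combine" step concrete, here is a minimal pure-Python sketch of the idea for a single query vector. It is an illustration under stated assumptions, not the Together Inference Engine's implementation: the function names are hypothetical, the chunks are processed in a plain loop rather than in parallel on a GPU, and everything runs on Python lists.

```python
import math


def _scores(q, keys):
    """Scaled dot-product scores of one query against a list of keys."""
    scale = 1.0 / math.sqrt(len(q))
    return [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in keys]


def attention_full(q, keys, values):
    """Reference: ordinary softmax attention for a single query vector."""
    scores = _scores(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    total = sum(weights)
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) / total
            for d in range(dim)]


def attention_chunk(q, keys, values):
    """Attention over one key/value chunk.

    Returns the chunk's max score, its sum of exponentials, and the
    unnormalized weighted sum of values, all relative to the local max.
    """
    scores = _scores(q, keys)
    m = max(scores)
    weights = [math.exp(s - m) for s in scores]
    dim = len(values[0])
    out = [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]
    return m, sum(weights), out


def attention_split_kv(q, keys, values, chunk_size):
    """Split keys/values into chunks, then rescale and combine the partials."""
    partials = [
        attention_chunk(q, keys[i:i + chunk_size], values[i:i + chunk_size])
        for i in range(0, len(keys), chunk_size)
    ]
    global_max = max(m for m, _, _ in partials)
    dim = len(values[0])
    total = 0.0
    combined = [0.0] * dim
    for m, exp_sum, out in partials:
        # Bring this chunk's partial results onto the shared (global max) scale.
        rescale = math.exp(m - global_max)
        total += exp_sum * rescale
        for d in range(dim):
            combined[d] += out[d] * rescale
    return [c / total for c in combined]
```

Because each chunk only needs its own keys and values, the chunks can be computed independently. The final rescaling step is what makes `attention_split_kv` agree with `attention_full` up to floating-point error.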

02

Using CUDA graphs greatly reduces the overhead of launching GPU operations in the Together Inference Engine. A CUDA graph lets a single CPU operation launch multiple GPU operations, which saves the per-launch overhead.

03

Developed by our expert research team, the Together Inference Engine layers multiple techniques on top of one another. As we combine them, we make painstaking optimizations to get unmatched efficiency.

control

Privacy settings put you in control of what data is kept. None of your data will be used by Together AI to train new models unless you explicitly opt in to share it.

autonomy

When you fine-tune or train a model with Together AI, the resulting model is your own private model. You own it.

security

Together AI offers flexibility to deploy in a variety of secure clouds for enterprise customers.

Customize leading open-source models with your own private data.

Achieve higher accuracy on your domain tasks.

Start by preparing your dataset: one training example per line in a .jsonl file, following the prompt template of the model you are fine-tuning.

    {"text": "<s>[INST] <<SYS>>\n{your_system_message}\n<</SYS>>\n\n{user_message_1} [/INST]"}
    {"text": "<s>[INST] <<SYS>>\n{your_system_message}\n<</SYS>>\n\n{user_message_1} [/INST]"}

Validate that your dataset has the right format and upload it.

    together files check $FILE_NAME
    together files upload $FILE_NAME

    {
      "filename": "acme_corp_customer_support.json",
      "id": "file-aab9997e-bca8-4b7e-a720-e820e682a10a",
      "object": "file"
    }

Begin fine-tuning with a single command, with full control over hyperparameters.

    together finetune create --training-file $FILE_ID --model $MODEL_NAME \
      --wandb-api-key $WANDB_API_KEY --suffix v1 --n-epochs 10 --n-checkpoints 5 \
      --batch-size 8 --learning-rate 0.0003

    {
      "training_file": "file-aab9997e-bca8-4b7e-a720-e820e682a10a",
      "model_output_name": "username/togethercomputer/llama-2-13b-chat",
      "model_output_path": "s3://together/finetune/63e2b89da6382c4d75d5ef22/username/togethercomputer/llama-2-13b-chat",
      "Suffix": "Llama-2-13b 1",
      "model": "togethercomputer/llama-2-13b-chat",
      "n_epochs": 4,
      "batch_size": 128,
      "learning_rate": 1e-06,
      "checkpoint_steps": 2,
      "created_at": 1687982945,
      "updated_at": 1687982945,
      "status": "pending",
      "id": "ft-5bf8990b-841d-4d63-a8a3-5248d73e045f",
      "epochs_completed": 3,
      "events": [
        {
          "object": "fine-tune-event",
          "created_at": 1687982945,
          "message": "Fine tune request created",
          "type": "JOB_PENDING"
        }
      ],
      "queue_depth": 0,
      "wandb_project_name": "Llama-2-13b Fine-tuned 1"
    }

Monitor results on Weights & Biases, or deploy checkpoints and test them through the Together Playgrounds.
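The dataset preparation step above can be scripted. The following is a minimal sketch, assuming the Llama-2 chat template shown in the example rows and a single `text` field per row. The helper names (`build_rows`, `write_jsonl`, `check_jsonl`) are hypothetical, and the local check is only a quick sanity pass before running `together files check`, not a replacement for it.

```python
import json

# Prompt template copied from the example rows above (Llama-2 chat format).
LLAMA2_TEMPLATE = "<s>[INST] <<SYS>>\n{system}\n<</SYS>>\n\n{user} [/INST]"


def build_rows(system_message, user_messages):
    """Turn raw user messages into {"text": ...} rows using the template."""
    return [
        {"text": LLAMA2_TEMPLATE.format(system=system_message, user=message)}
        for message in user_messages
    ]


def write_jsonl(rows, path):
    """Write one JSON object per line, as the .jsonl format expects."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(json.dumps(row, ensure_ascii=False) + "\n")


def check_jsonl(path):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    with open(path, encoding="utf-8") as f:
        for number, line in enumerate(f, start=1):
            if not line.strip():
                problems.append(f"line {number}: empty line")
                continue
            try:
                row = json.loads(line)
            except json.JSONDecodeError as exc:
                problems.append(f"line {number}: invalid JSON ({exc.msg})")
                continue
            if not isinstance(row, dict) or not isinstance(row.get("text"), str):
                problems.append(
                    f"line {number}: expected an object with a string 'text' field"
                )
    return problems
```

A typical flow would be `write_jsonl(build_rows(system, messages), "train.jsonl")`, then `check_jsonl("train.jsonl")`, and only then the CLI check and upload.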

Together fine-tuning

Fine-tune models with your data.

Host your fine-tuned model for inference when it’s ready.

Together Custom Models is designed to help you train your own state-of-the-art AI model.

Benefit from cutting-edge optimizations in the Together Training stack like FlashAttention-2.

Once done, the model is yours. You retain full ownership of the model that is created, and you can run your model wherever you please.

Together Custom Models helps you through all stages of building your state-of-the-art AI model:

01. Start with data design.

Incorporate quality signals from RedPajama-v2 (30T tokens) into your model to boost its quality.

Choose data based on similarity to Wikipedia, amount of code, or how often the text uses bullets for brevity. For more details on the quality slices in RedPajama-v2 read the blog post.

Leverage advanced data selection tools like DSIR to select data slices and then optimize the amount of each slice used with DoReMi.
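As a rough illustration of the data design step, the sketch below filters documents on per-document quality signals and then splits a token budget across slices. It makes several assumptions: the signal keys (`wiki_similarity`, `code_fraction`, `bullet_fraction`) are hypothetical stand-ins rather than RedPajama-v2 field names, and the mixture weights are simply given as input. It does not implement DSIR or DoReMi; it only shows where their outputs would plug in.

```python
def select_documents(docs, min_wiki_similarity=0.0, max_code_fraction=1.0,
                     max_bullet_fraction=1.0):
    """Keep documents whose (hypothetical) quality signals pass every threshold."""
    return [
        doc for doc in docs
        if doc["wiki_similarity"] >= min_wiki_similarity
        and doc["code_fraction"] <= max_code_fraction
        and doc["bullet_fraction"] <= max_bullet_fraction
    ]


def token_budgets(mixture_weights, total_tokens):
    """Split a total token budget across slices in proportion to their weights.

    The weights stand in for what a mixture-optimization tool would produce.
    """
    weight_sum = sum(mixture_weights.values())
    if weight_sum <= 0:
        raise ValueError("mixture weights must sum to a positive number")
    return {
        name: round(total_tokens * weight / weight_sum)
        for name, weight in mixture_weights.items()
    }
```

For example, `select_documents(docs, min_wiki_similarity=0.5, max_code_fraction=0.2)` keeps only prose-heavy, Wikipedia-like documents, and `token_budgets({"wiki_like": 3, "code": 1}, 1000)` assigns three quarters of the budget to the first slice.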

02. Select model architecture & training recipe.

We provide proven training recipes for instruction-tuning, long context optimization, conversational chat, and more.

Work in collaboration with our team of experts to determine the optimal architecture and training recipe.

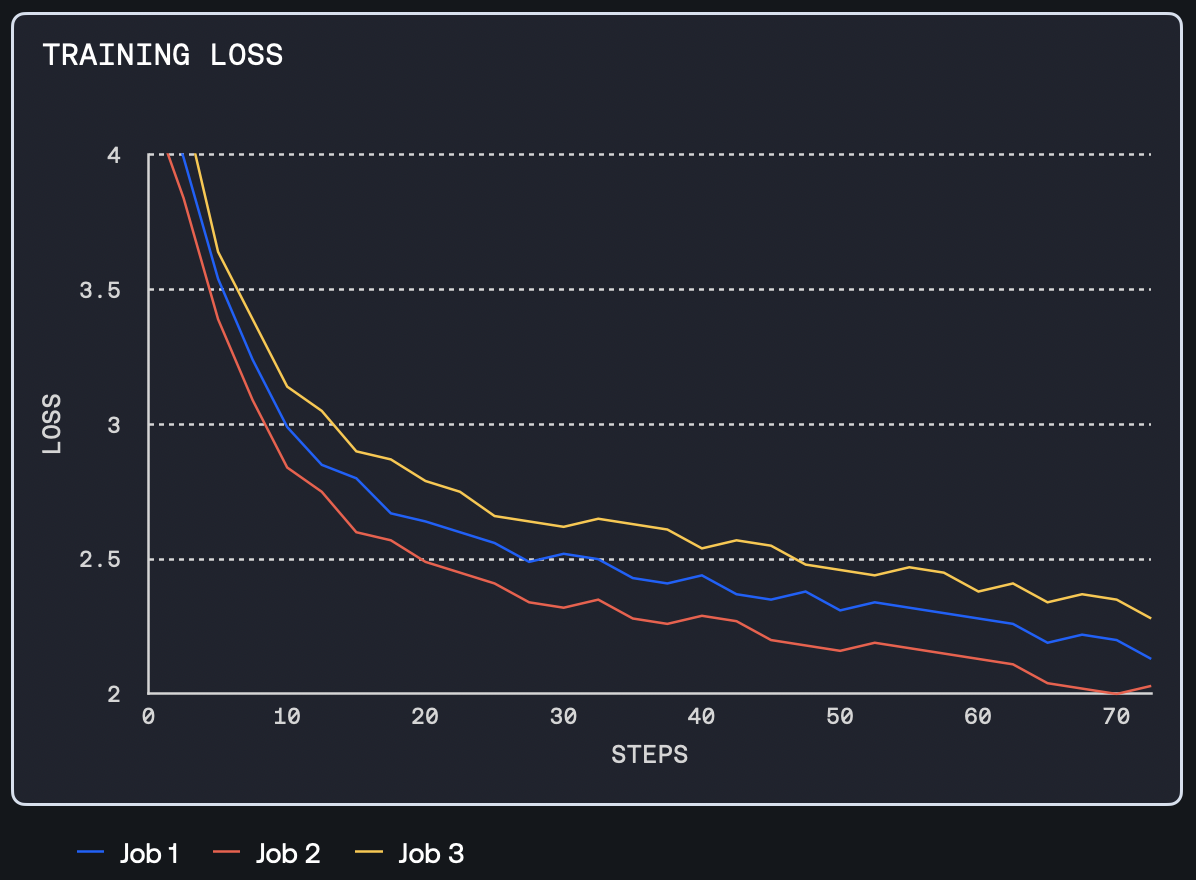

03. Train your model.

Press go. Together Custom Models schedules, orchestrates, and optimizes your training jobs over any number of GPUs.

Up to 9x faster training with FlashAttention-2 [9]

Up to 75% lower cost than training on AWS [10]

04. Tune and align your model.

Further customize and tailor your model to follow instructions and your business rules.

05. Evaluate model quality.

Evaluate your final model on public benchmarks such as HELM and LM Evaluation Harness, and your own custom benchmark — so you can iterate quickly on model quality.
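For the custom-benchmark part of this step, a small harness is often enough to start iterating. The sketch below is an assumption-laden example, not part of Together Custom Models: `generate` is a hypothetical callable that maps a prompt to the model's text output, and exact match (case-insensitive, whitespace-trimmed) is just one possible scoring rule.

```python
def exact_match_accuracy(generate, benchmark):
    """Score a model on (prompt, expected_answer) pairs using exact match.

    `generate` is any callable taking a prompt string and returning a string;
    how it reaches the model is left to the caller.
    """
    if not benchmark:
        raise ValueError("benchmark must contain at least one example")
    correct = sum(
        1 for prompt, expected in benchmark
        if generate(prompt).strip().lower() == expected.strip().lower()
    )
    return correct / len(benchmark)
```

Keeping the scoring function independent of the model client makes it easy to rerun the same benchmark against each checkpoint and compare the numbers.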

Together custom models

Build models from scratch

We love to build state-of-the-art models. Use Together Custom Models to train your next generative AI model.

Performance metrics

Training horsepower

Relative to AWS

Training speed

Benefits

Scale infra – at your pace

Start with as little as 30 days, and expand at your own pace. Scale up or down as your needs change, from 16 GPUs to 2048 GPUs.

SNAPPY SETUP. BLAZING FAST TRAINING.

We value your time. Your cluster comes optimized for distributed training, with the high-performance Together Training stack and a preconfigured Slurm cluster out of the box. You focus on your model and we’ll ensure everything runs smoothly. SSH in, download your data, and start training.

EXPERT SUPPORT

Our team is dedicated to your success. Our experts will help unblock you, whether you have AI or system issues. A guaranteed uptime SLA and support are included with every cluster, and additional engineering services are available when needed.

Hardware specs

A100 PCIe Cluster Node Specs

- 8x A100 / 80GB / PCIe

- 200Gb non-blocking Ethernet

- 120 vCPU Intel Xeon (Ice Lake)

- 960GB RAM

- 7.68 TB NVMe storage

A100 SXM Cluster Node Specs

- 8x NVIDIA A100 80GB SXM4

- 200 Gbps Ethernet or 1.6 Tbps Infiniband configs available

- 120 vCPU Intel Xeon (Sapphire Rapids)

- 960 GB RAM

- 8 x 960GB NVMe storage

H100 Clusters Node Specs

- 8x Nvidia H100 / 80GB / SXM5

- 3.2 Tbps Infiniband network

- 2x AMD EPYC 9474F 48 Cores 96 Threads 3.6GHz CPUs

- 1.5TB ECC DDR5 Memory

- 8x 3.84TB NVMe SSDs
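The node specs above translate directly into cluster-level totals. The sketch below works through that arithmetic for the 16 to 2048 GPU range mentioned earlier. It assumes every node matches the H100 spec (8 GPUs with 80 GB each, 8 x 3.84 TB NVMe) and that clusters are sized in whole nodes. The function name is hypothetical.

```python
GPUS_PER_NODE = 8  # each listed node type carries 8 GPUs


def cluster_summary(num_gpus, gpu_memory_gb=80, nvme_tb_per_node=8 * 3.84):
    """Compute node count, total GPU memory, and total NVMe for a cluster."""
    if num_gpus <= 0 or num_gpus % GPUS_PER_NODE:
        raise ValueError("num_gpus must be a positive multiple of 8")
    nodes = num_gpus // GPUS_PER_NODE
    return {
        "nodes": nodes,
        "gpu_memory_gb": num_gpus * gpu_memory_gb,
        "nvme_tb": round(nodes * nvme_tb_per_node, 2),
    }
```

Under these assumptions, a 16-GPU cluster is 2 nodes with 1,280 GB of GPU memory, and a 2048-GPU cluster is 256 nodes with 163,840 GB of GPU memory.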

Customers Love Us

“Together GPU Clusters provided a combination of amazing training performance, expert support, and the ability to scale to meet our rapid growth to help us serve our growing community of AI creators.”