Qwen3-TTS Family is Now Open Sourced: Voice Design, Clone, and Generation

Extracto

I haven't been paying much attention to the state-of-the-art in speech generation models other than noting that they've got really good, so I can't speak for how notable this new …

Resumen

Resumen Principal

La familia Qwen3-TTS ha sido lanzada como una solución open source bajo la licencia Apache 2.0, marcando un hito significativo en la tecnología de síntesis de voz. Estos modelos avanzados de texto a voz (TTS) destacan por ser multilingües, controlables, robustos y capaces de generar voz en streaming en tiempo real, gracias a una innovadora arquitectura LM de doble vía. Su entrenamiento con más de 5 millones de horas de datos de habla en diez idiomas diferentes les permite ofrecer un rendimiento de estado del arte en diversas métricas. La característica más revolucionaria es la clonación de voz en solo 3 segundos y el control basado en descripciones, que permite a los usuarios no solo replicar voces existentes, sino también crear voces completamente nuevas o manipular las características del habla con gran precisión. Esta liberación democratiza el acceso a capacidades avanzadas de generación de voz, haciéndolas accesibles para desarrolladores y usuarios finales a través de una demo en Hugging Face y herramientas CLI.

Elementos Clave

- Tecnología de Voz Multilingüe y Controlable: La serie Qwen3-TTS se presenta como una familia de modelos de texto a voz (TTS) avanzados que son multilingües, controlables, robustos y de streaming. Han sido entrenados con más de 5 millones de horas de datos de voz que abarcan 10 idiomas, asegurando una amplia cobertura lingüística y un rendimiento superior.

- Clonación de Voz en 3 Segundos y Manipulación Descriptiva: Una de las capacidades más destacadas es la **clonación

Contenido

Qwen3-TTS Family is Now Open Sourced: Voice Design, Clone, and Generation (via) I haven't been paying much attention to the state-of-the-art in speech generation models other than noting that they've got really good, so I can't speak for how notable this new release from Qwen is.

From the accompanying paper:

In this report, we present the Qwen3-TTS series, a family of advanced multilingual, controllable, robust, and streaming text-to-speech models. Qwen3-TTS supports state-of- the-art 3-second voice cloning and description-based control, allowing both the creation of entirely novel voices and fine-grained manipulation over the output speech. Trained on over 5 million hours of speech data spanning 10 languages, Qwen3-TTS adopts a dual-track LM architecture for real-time synthesis [...]. Extensive experiments indicate state-of-the-art performance across diverse objective and subjective benchmark (e.g., TTS multilingual test set, InstructTTSEval, and our long speech test set). To facilitate community research and development, we release both tokenizers and models under the Apache 2.0 license.

To give an idea of size, Qwen/Qwen3-TTS-12Hz-1.7B-Base is 4.54GB on Hugging Face and Qwen/Qwen3-TTS-12Hz-0.6B-Base is 2.52GB.

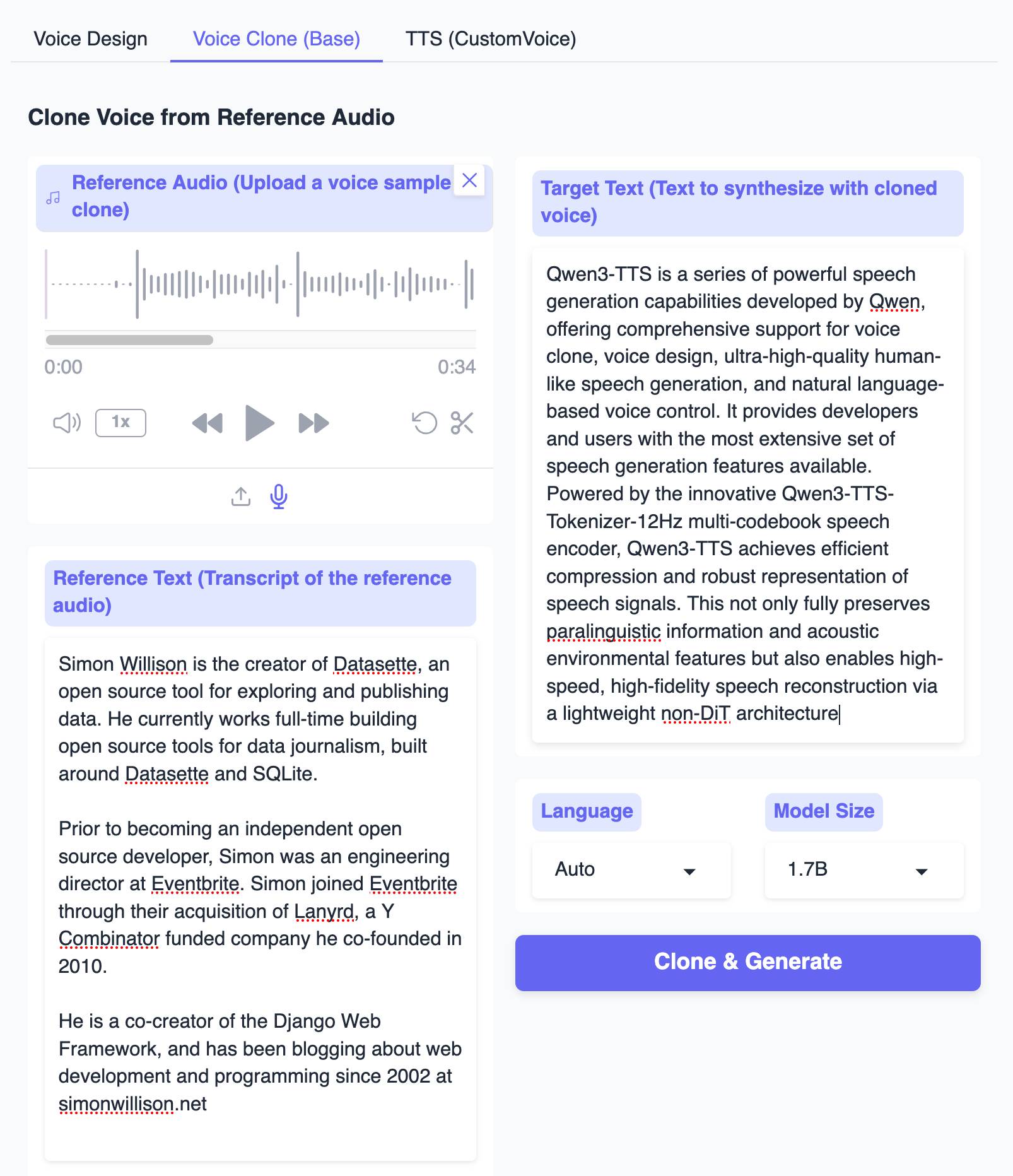

The Hugging Face demo lets you try out the 0.6B and 1.7B models for free in your browser, including voice cloning:

I tried this out by recording myself reading my about page and then having Qwen3-TTS generate audio of me reading the Qwen3-TTS announcement post. Here's the result:

It's important that everyone understands that voice cloning is now something that's available to anyone with a GPU and a few GBs of VRAM... or in this case a web browser that can access Hugging Face.

Update: Prince Canuma got this working with his mlx-audio library. I had Claude turn that into a CLI tool which you can run with uv ike this:

uv run https://tools.simonwillison.net/python/q3_tts.py \

'I am a pirate, give me your gold!' \

-i 'gruff voice' -o pirate.wav

The -i option lets you use a prompt to describe the voice it should use. On first run this downloads a 4.5GB model file from Hugging Face.

Fuente: Simon Willison’s Weblog